CPU-Centric Architecture Is Evolving into Data-Centric Composable Architecture

The recent boom in AI and real-time big data analytics has displaced the convention of concentrating computing power in the CPU and popularized heterogeneous computing across CPUs, GPUs, NPUs, and DPUs. This shift raises memory capacity and bandwidth requirements beyond what traditional CPU-centric architectures can meet.

Trends

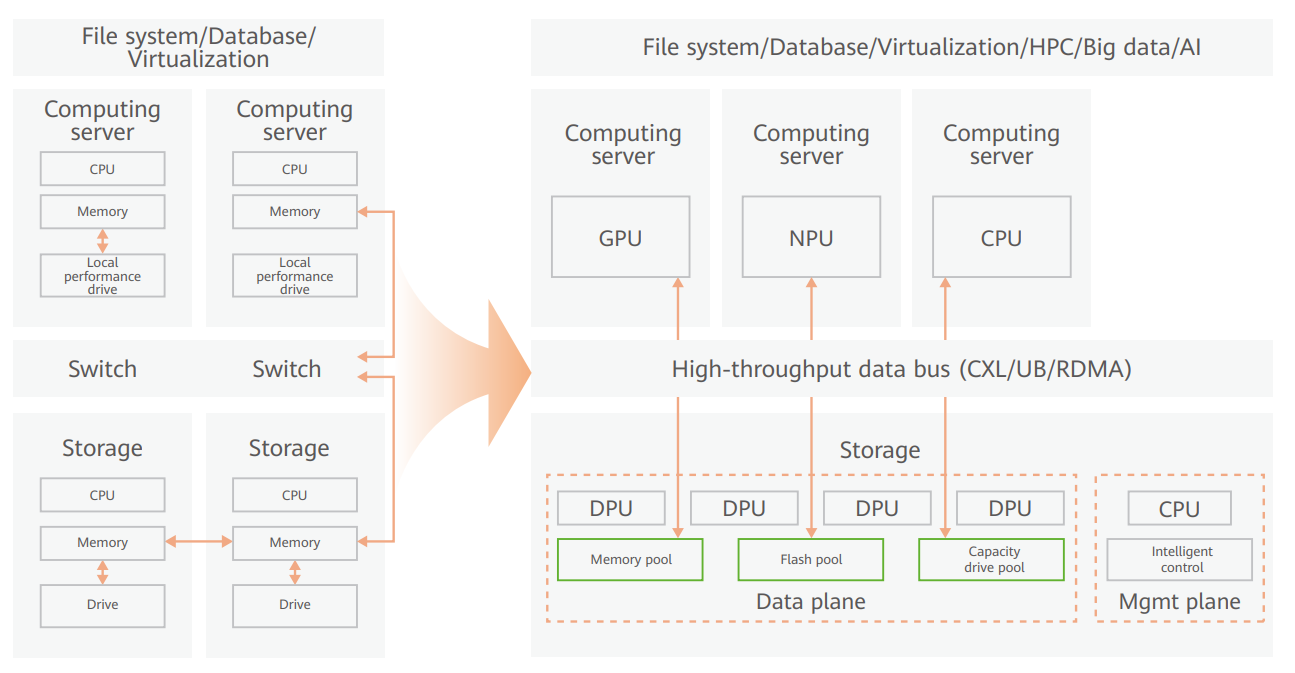

CPU-centric server architecture is evolving into data-centric composable architecture

CPU-centered computing alone is no longer sufficient for increasingly diverse applications that demand fast, real-time data processing. As a result, heterogeneous computing, typified by GPUs, is emerging.

New computing hardware has boosted the efficiency of hot-data processing in I/O-intensive applications, but it also puts extra pressure on memory access.

The capacity and bandwidth of local memory can no longer meet these data processing requirements.

In 2019, Intel launched Compute Express Link (CXL), an open interconnect standard. CXL's memory-semantic bus enables fast access to external memory and expands memory capacity, making it possible to decouple memory from CPUs and allowing external high-speed storage devices and heterogeneous computing resources to form a memory pool.

This decoupling is reshaping the CPU-centric server architecture into a data-centric composable architecture. Computing, memory, and storage resources from different computing units can be combined on demand, and heterogeneous computing units can directly access memory and storage resources over the high-speed bus.
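To make "combined on demand" concrete, here is a minimal Python sketch of composing a logical server from shared resource pools. It is a toy model for illustration only, not how CXL or any vendor's fabric manager works: the `ResourcePool` and `Composer` classes, the pool names, and the capacities are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ResourcePool:
    """A shared pool of one resource type (hypothetical model)."""
    name: str
    capacity: int       # total units, e.g. GB of memory or number of GPUs
    allocated: int = 0  # units currently handed out

    def free(self) -> int:
        return self.capacity - self.allocated


@dataclass
class Composer:
    """Carves logical servers out of shared pools on demand (hypothetical)."""
    pools: dict[str, ResourcePool]

    def compose(self, request: dict[str, int]) -> dict[str, int]:
        # Validate the whole request first so that a failure
        # leaves every pool untouched.
        for kind, amount in request.items():
            if kind not in self.pools:
                raise KeyError(f"no such pool: {kind}")
            if amount > self.pools[kind].free():
                raise RuntimeError(
                    f"{kind} pool exhausted: requested {amount}, "
                    f"free {self.pools[kind].free()}"
                )
        for kind, amount in request.items():
            self.pools[kind].allocated += amount
        return dict(request)

    def decompose(self, grant: dict[str, int]) -> None:
        # Return a previously granted allocation to the pools.
        for kind, amount in grant.items():
            self.pools[kind].allocated -= amount


if __name__ == "__main__":
    composer = Composer({
        "gpu": ResourcePool("gpu", 8),
        "memory_gb": ResourcePool("memory_gb", 2048),
        "flash_tb": ResourcePool("flash_tb", 100),
    })
    # An AI job takes half the GPUs and most of the pooled memory.
    grant = composer.compose({"gpu": 4, "memory_gb": 1536})
    print("free memory (GB):", composer.pools["memory_gb"].free())
    # When the job finishes, its resources go back to the pools.
    composer.decompose(grant)
```

The point of the sketch is that capacity belongs to the pool rather than to any one CPU: a workload borrows exactly what it needs and returns it afterward, instead of being bounded by the memory installed in a single server.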

The new server architecture also repositions storage, which will no longer be limited to managing disks but will incorporate in-memory storage in the future.

At the Flash Memory Summit 2023, MemVerge, Samsung, XConn, and H3 released 2 TB CXL memory pooling systems suited for AI, and the South Korean company Panmnesia demonstrated its 6 TB CXL memory pooling system.

Figure 1: CPU-centric server architecture evolving into data-centric composable architecture

CPU-centric storage architecture is evolving into data-centric composable architecture

In the future, applications such as AI and big data will require higher performance and lower latency, while CPU performance growth may slow down. As composable server architecture develops, storage architecture will also evolve into a data-centric composable architecture to greatly improve storage system performance. The storage system's processors (CPUs and DPUs), memory pools, flash pools, and capacity disk pools will be interconnected through new data buses. Once data enters the storage system, it can be written directly to memory or flash, avoiding slow data access caused by a CPU bottleneck.

Suggestions

Keep pace with the evolution of server and storage architectures, make timely adjustments, and seek opportunities in new storage

Data-centric architectures will be needed to cope with the sharp increase in data processing requirements. Enterprises should keep pace with the evolution of hardware in data centers, make timely adjustments, and build the optimal server and storage architectures to lay a solid data foundation for service development.


