The Hidden Cost of AI Contact Centers

In recent years, AI has become a standard feature of most contact center platforms. It is used in a variety of functions, such as chatbots, translation, automatic speech recognition (ASR), text-to-speech (TTS) conversion, and case summarization.

There are three main reasons why AI adoption in the contact center has been so widespread:

- It improves the customer experience: GenAI can power the chatbot so that it communicates in natural language and provides meaningful responses.

- It makes contact center agents more efficient: AI can offer agents suggested responses and supporting information such as client background, intent and emotion, and case summaries.

- It reduces the cost of the contact center: AI can help reduce the number of agents, shorten training time, predict staffing requirements, and more.

However, there are certain aspects of using AI that are often overlooked.

So, what are they?

- Data security: Using a cloud-based LLM means that potentially sensitive data is transmitted over the Internet and processed and stored by a third party.

- Latency & infrastructure: Depending on the backend infrastructure and the reliability of the LLM provider, responses may be delayed. Especially in voice and video GenAI, this latency can result in a poor customer experience.

- Legal or PR risks: An unmonitored chatbot that uses a cloud-based third-party LLM can produce an unwanted response, which users of the chat, voice, or video bot could perceive negatively.

- Uncontrollable cost of tokens: The use of a third-party LLM is not free. Enterprises pay the LLM provider based on the number of tokens processed, which grows with every interaction.

This last point, the uncontrollable cost of tokens, can become a real issue. Let me explain: when a client decides to use the chatbot to communicate with a vendor, the chatbot contacts the third-party LLM to process the request and formulate the response.

The LLM first breaks the prompt into tokens, which can be whole words, parts of words, or individual characters. The tokens are then analyzed, and a response is formulated and sent to the chatbot, which presents it to the client. The response also consists of tokens. The longer the prompt (request), the more input tokens it contains; the longer the response, the more output tokens. The third party then charges the vendor based on the number of tokens used per day, week, or month. Different LLMs charge different prices per token.
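To make the billing model concrete, here is a minimal Python sketch of how per-token charges add up for a single bot interaction. It assumes, for illustration, that the provider quotes separate prices per 1,000 input and output tokens (a common scheme, though the details vary by provider). The names `TokenPricing` and `interaction_cost` and the example prices are hypothetical, not any provider's actual rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Hypothetical price list, in US$ per 1,000 tokens."""
    input_per_1k: float
    output_per_1k: float


def interaction_cost(input_tokens: int, output_tokens: int, pricing: TokenPricing) -> float:
    """Cost of one interaction: prompt (input) tokens plus response (output) tokens."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens / 1000) * pricing.input_per_1k + (output_tokens / 1000) * pricing.output_per_1k


if __name__ == "__main__":
    # Illustrative prices only (assumption); real rates differ per LLM.
    pricing = TokenPricing(input_per_1k=0.01, output_per_1k=0.03)
    # A longer prompt or a longer answer both raise the bill.
    print(f"${interaction_cost(600, 400, pricing):.4f}")  # prints $0.0180
```

Pricing input and output separately matters because the same number of tokens can cost different amounts depending on whether the client writes a long question or the bot writes a long answer.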

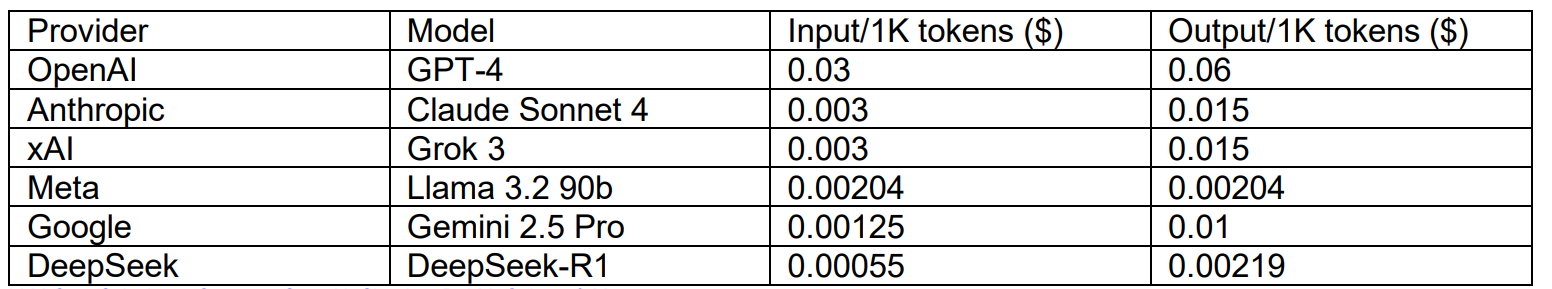

Here’s a summary of some of the more popular LLM’s:

A typical voice-bot conversation can use 1,000 tokens (input + output) or more. Imagine that a company has 100,000 voice-bot interactions per day, each interaction uses 1,000 tokens, and the average cost per 1,000 input/output tokens is US$0.02. The monthly cost of using the LLM would then be 100,000 interactions × (1,000 tokens / 1,000) × 30 days × US$0.02 = US$60,000 per month.
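The back-of-the-envelope calculation above can be reproduced in a few lines of Python. This sketch uses the same assumptions as the example: a single blended rate of US$0.02 per 1,000 tokens (input and output combined), 1,000 tokens per interaction, and a 30-day month. The function name `monthly_llm_cost` is hypothetical.

```python
def monthly_llm_cost(
    interactions_per_day: int,
    tokens_per_interaction: int,
    price_per_1k_tokens: float,
    days_per_month: int = 30,
) -> float:
    """Monthly LLM spend, assuming one blended price per 1,000 tokens (input + output)."""
    tokens_per_month = interactions_per_day * tokens_per_interaction * days_per_month
    return tokens_per_month / 1000 * price_per_1k_tokens


# Figures from the example: 100,000 interactions/day, 1,000 tokens each, US$0.02 per 1K tokens.
cost = monthly_llm_cost(100_000, 1_000, 0.02)
print(f"${cost:,.0f} per month")  # prints $60,000 per month
```

Because every input is a simple multiplier, changing any single figure (traffic, conversation length, or price) changes the monthly bill proportionally.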

An additional concern is that the number of chat-, voice-, or video-bot interactions is impossible to control. If a vendor is suddenly mentioned in the media or launches a marketing campaign, many clients might simultaneously decide to contact the vendor with questions. If the number of interactions doubles, the LLM cost doubles as well.
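Because token charges scale linearly with volume, a sudden spike flows straight through to the bill. The sketch below, assuming the same 1,000 tokens per interaction and blended US$0.02 per 1,000 tokens as the voice-bot example, compares a normal month with one in which a media mention or campaign doubles traffic for 10 days. The daily volumes and the function name `cost_for_days` are invented for illustration.

```python
TOKENS_PER_INTERACTION = 1_000  # assumption, matches the voice-bot example
PRICE_PER_1K_TOKENS = 0.02      # assumption: blended US$ per 1,000 tokens


def cost_for_days(daily_interactions: list[int]) -> float:
    """Total LLM cost for a sequence of days, given the interactions on each day."""
    total_tokens = sum(n * TOKENS_PER_INTERACTION for n in daily_interactions)
    return total_tokens / 1000 * PRICE_PER_1K_TOKENS


baseline = [100_000] * 30
# Hypothetical campaign: traffic doubles for 10 of the 30 days.
campaign = [100_000] * 20 + [200_000] * 10

print(f"baseline: ${cost_for_days(baseline):,.0f}")
print(f"campaign: ${cost_for_days(campaign):,.0f}")
```

With these invented figures, ten days of doubled traffic raise the monthly bill from US$60,000 to US$80,000, and doubled traffic for the whole month would raise it to US$120,000.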

If the client is not satisfied with the bot's response, the client can ask to talk to an agent instead. Because the agent also relies on the LLM for ASR/TTS, case summarization, sentiment/intent recognition, and so on, each escalation adds even more to the cost of using the third-party LLM.
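Escalations compound the effect: a contact that ends with an agent consumes the bot's tokens and then the agent-side tokens on top. The sketch below models the expected tokens per contact with a hypothetical escalation rate and a hypothetical token count for agent-side features on each escalated contact. The function name `expected_tokens_per_contact` and all numbers are placeholders, not measurements.

```python
def expected_tokens_per_contact(
    bot_tokens: int,
    escalation_rate: float,
    agent_assist_tokens: int,
) -> float:
    """Expected LLM tokens per contact when a share of bot sessions escalates to an agent.

    bot_tokens: tokens used by the bot conversation (every contact starts here).
    escalation_rate: fraction of contacts handed to an agent (0.0 to 1.0).
    agent_assist_tokens: extra tokens used by agent-side features on an escalated contact.
    """
    if not 0.0 <= escalation_rate <= 1.0:
        raise ValueError("escalation_rate must be between 0 and 1")
    return bot_tokens + escalation_rate * agent_assist_tokens


# Placeholder figures: 1,000 bot tokens, 20% escalate, 3,000 agent-side tokens each.
print(expected_tokens_per_contact(1_000, 0.20, 3_000))
```

With these placeholder numbers, each contact uses 1.6 times the tokens of a bot-only conversation on average, and the expected cost rises in the same proportion.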

There are only a few options for overcoming this problem:

1. The providers make the use of their LLMs free of charge (unlikely)

2. Limit the number of words when using the bot (not very customer-friendly)

3. Develop your own LLM (complicated and time-consuming)

4. Use a third-party LLM in a private cloud or on-premise.

Only this last option seems to offer a viable way to avoid paying third-party LLM providers per token. Huawei understands this dilemma and has partnered with LLM providers, such as DeepSeek and Qwen, to offer an on-premise LLM solution.

The Huawei “AICC Appliance” combines all the hardware and software necessary to run a contact center platform on-premise or in a private cloud. This includes network, server, and storage components from Huawei, the standalone LLM Service Node and LLM engine, and the Huawei AICC contact center software solution.

Because the AICC Appliance is installed on-site, it not only avoids token costs but also keeps sensitive client information local. The appliance also provides high availability and enables easy deployment. Huawei provides local experts and consulting services for training and operating the LLM and the AICC software.

Huawei has over 30 years of experience in the contact center industry and, for the past 10 years, has held the leading market share for contact center software in China, where AICC serves customers with more than 20,000 agents. Many global customers rely on AICC to provide an excellent customer experience, streamline contact center operations, and reduce operational expenses.

AICC is available as both an on-premise and a SaaS solution. Customers who are interested in AICC can contact their local Huawei office.

Learn more about the Huawei AICC solution.


Disclaimer: Any views and/or opinions expressed in this post by individual authors or contributors are their personal views and/or opinions and do not necessarily reflect the views and/or opinions of Huawei Technologies.
